(credit: Kris Connor / Getty Images)

I grew up in a low-tech household. My dad only replaced something if it caught fire. We owned about 15 cars (mostly Humber 80s), and 13 of them were used to keep the other two running. Same story for tractors and any other farm equipment you care to name. Dad's basic rule was that if he couldn't repair it, we didn't need it. We weren't anti-technology, but technology had to serve a purpose. It had to work reliably or at least be fun to repair.

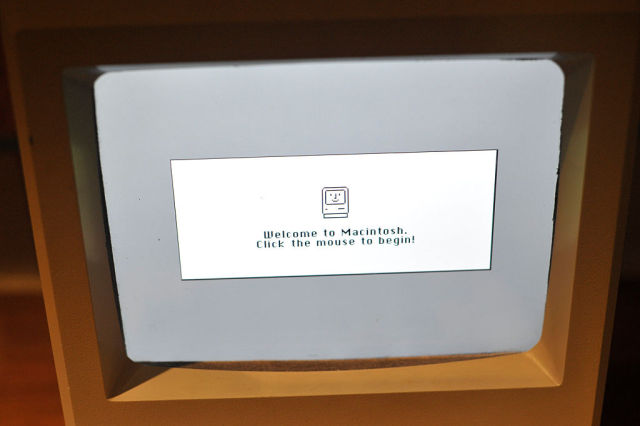

Then I decided I wanted a computer. Much saving ensued, and after a while I was the proud owner of a secondhand Commodore VIC-20, with an expanded memory system, advanced BASIC, and a wonky tape drive... and no TV to plug it into. After begging an old black-and-white television from family friends, I was set for my computing adventures. But they didn't turn out as planned.

Yes, I loved the games, and I tried programming. I even enjoyed attempting to make games involving weird lumpy things colliding with other weird lumpy things. But I never really understood how to program. I could do simple things, but I didn't have the dedication or background to go further. There was no one around to guide me into programming, and, even worse, I couldn't imagine doing anything useful with my VIC-20. After a couple of years, the VIC-20 got packed away and forgotten.